Application Details

EyeControl

From 2016 to 2019

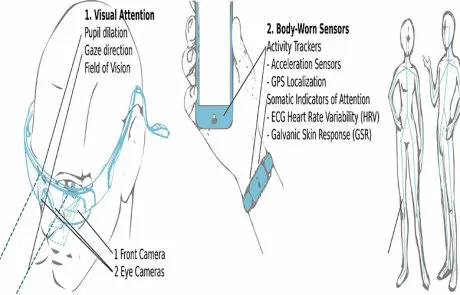

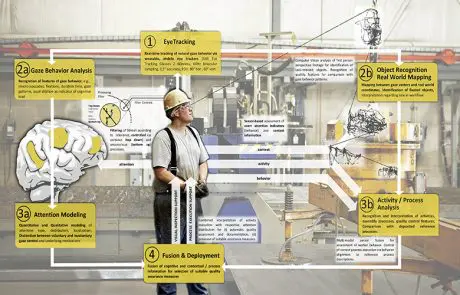

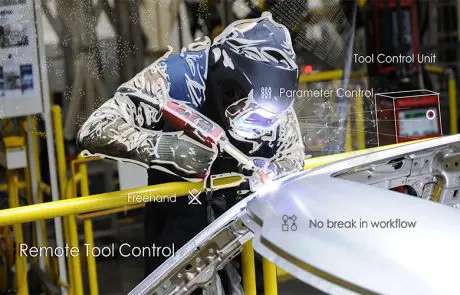

Eye-gaze research has a long tradition in medical and psychological research, in areas such as visual perception, attention, the modelling and analysis of cognitive load, non-verbal communication, and the study of social behaviour in general. With the availability of new eye-tracking technologies (e.g. 500 Hz tracking, mobile tracking platforms), eye-gaze research is now rapidly gaining momentum in pervasive and ubiquitous computing, wearable computing, and perception- and attention-related IT in general. So far, research has mainly dealt with eye tracking as an analysis tool (e.g. usability studies on the effectiveness of advertising motifs or on how eye-catching websites are), with gaze-based interaction with computer applications (e.g. video games), and with the assessment of a viewer's attention or cognitive engagement. The EyeControl project proposal takes up a question that has not been addressed so far: whether eye contact is suitable as an explicit and implicit means of interaction between humans and complex industrial systems. EyeControl is based on the observation that humans look at (focus on) objects and devices before they interact with them. It is therefore natural to make this visual pre-interaction inspection itself the trigger for operations on the focused devices. EyeControl thus aims at interaction modalities in which the gaze itself triggers intended actions on industrial machines and equipment.
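The proposal does not specify how a gaze is turned into a command. One common technique in gaze interaction is dwell-time selection: an action fires once the gaze has rested on the same target for a fixed time. The Python sketch below illustrates that idea under stated assumptions only; the class `DwellTrigger`, the device identifiers and the 500 ms threshold are hypothetical and not part of EyeControl. It also assumes that an upstream component already maps each gaze sample to the id of the device being looked at (or `None`).

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class DwellTrigger:
    """Hypothetical dwell-time trigger: fires a device action once the gaze has
    rested on the same target for at least `dwell_ms` milliseconds."""

    dwell_ms: float
    actions: Dict[str, Callable[[], None]]  # target id -> action (illustrative)
    _current: Optional[str] = field(default=None, init=False)
    _since: float = field(default=0.0, init=False)
    _fired: bool = field(default=False, init=False)

    def update(self, t_ms: float, target: Optional[str]) -> Optional[str]:
        """Feed one gaze sample: a timestamp and the id of the focused device
        (None if the gaze is on no known device). Returns the target id if
        this sample triggered its action, otherwise None."""
        if target != self._current:
            # Gaze moved to another device or away: restart the dwell timer.
            self._current, self._since, self._fired = target, t_ms, False
            return None
        if target is None or self._fired:
            return None
        if t_ms - self._since >= self.dwell_ms:
            self._fired = True  # fire at most once per continuous dwell
            action = self.actions.get(target)
            if action is not None:
                action()
                return target
        return None


if __name__ == "__main__":
    # Assumed example: looking at a (hypothetical) press for >= 500 ms starts it.
    trigger = DwellTrigger(dwell_ms=500, actions={"press_1": lambda: print("press_1 started")})
    for t, target in [(0, "press_1"), (250, "press_1"), (550, "press_1"), (800, None)]:
        trigger.update(t, target)
```

Firing only once per continuous dwell avoids repeatedly triggering the same machine operation while the worker keeps looking at it, and looking away resets the timer. Pure dwell-time selection is prone to the "Midas touch" problem (unintended activation by merely looking), so in practice the threshold, or an additional confirmation step, would need to be tuned to the task.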

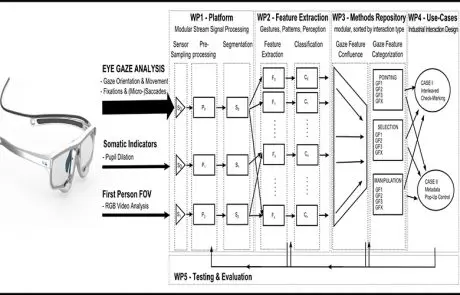

On the way towards gaze-based interaction with (and control of) complex industrial equipment, the applicant can build on eye-tracking approaches developed within the research group, such as opportunistic gaze measurement, real-time acquisition of attention and cognitive load based on fixations and saccades, and on/off switching by eye contact. EyeControl represents a fundamentally different approach from traditional augmented and mixed reality solutions for human-machine interaction in industrial production. Within the scope of the project, a set of methods is developed and mapped onto universal, generally applicable and reusable control components (pointing, selecting, manipulating, etc.). Provided as a collection of plug-and-play modules, these components are intended to simplify the configuration of gaze-based controls in a wide range of industrial applications (construction, maintenance, assembly, repair, quality assurance, etc.).
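The text mentions deriving attention and cognitive load from fixations and saccades but does not say how fixations are detected. Purely as an illustration, the Python sketch below implements the dispersion-threshold identification (I-DT) algorithm, a widely used fixation-detection technique; it is not necessarily the method used within the research group. The sample format `(t_ms, x, y)`, the function name `detect_fixations_idt` and the threshold values are assumptions. Gaze coordinates may be in pixels or degrees of visual angle, as long as `max_dispersion` uses the same unit.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # assumed format: (t_ms, x, y)


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x: float  # centroid of the fixation window
    y: float


def _dispersion(points: Sequence[Sample]) -> float:
    """I-DT dispersion: (max x - min x) + (max y - min y)."""
    xs = [p[1] for p in points]
    ys = [p[2] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations_idt(samples: Sequence[Sample], max_dispersion: float,
                         min_duration_ms: float) -> List[Fixation]:
    """Hypothetical I-DT sketch over time-ordered gaze samples."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Build an initial window that spans at least min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # not enough remaining data for another fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Grow the window while the points stay within the threshold.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            fixations.append(Fixation(
                start_ms=window[0][0],
                end_ms=window[-1][0],
                x=sum(p[1] for p in window) / len(window),
                y=sum(p[2] for p in window) / len(window),
            ))
            i = j + 1
        else:
            i += 1  # first sample is not part of a fixation (e.g. a saccade)
    return fixations
```

Samples that end up in no fixation (such as the jump between two gaze clusters) can be treated as belonging to saccades. Fixation durations and saccade counts obtained this way are the kind of features on which real-time attention or cognitive-load estimates could be built.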

Technologically, EyeControl builds on mobile eye-tracking sensors to (i) analyze gaze behavior in real time, (ii) evaluate perception and awareness based on cognitive models of factory workers, and finally (iii) trigger explicit and implicit interaction with industrial equipment and machines. EyeControl research results will be validated in Cyber-Physical System Validation (Industry 4.0) scenarios and implemented in practice in cooperation with Austrian and European world market leaders in industrial production, voestalpine Stahl and voestalpine Polynorm, among other things to (i) optimize product quality through human visual inspection and (ii) enable attention- and process-guided interaction in complex assembly activities. Furthermore, the intended results are expected to provide a fundamental basis for human-system interaction in many other application areas, such as medical technology, maintenance technology and operations management.